Voice cloning scams are a growing threat. Here's how you can protect yourself.

NEW YORK -- Voice cloning scams are a growing threat, but there are steps you can take to protect yourself.

Websites powered by artificial intelligence now allow anyone to clone the voice of your loved one -- but scammers aren't just impersonating family members and friends.

Data from the Federal Trade Commission shows that scams involving business imposters have been on the rise over the past four years. Last year, more than $752 million was lost.

How easy is it to clone someone's voice?

CBS New York's Mahsa Saeidi set out to find out what it takes to clone a voice.

After a quick Google search, Saeidi found an AI-powered website and paid $5 to use its voice cloning service.

Next, she needed a 30-second audio clip of the voice she wanted to replicate. CBS New York's investigative executive producer loaned his voice, but Saeidi also could've pulled it from his social media.

With just those few simple steps, she was able to make his AI-generated voice say whatever she typed.

The whole process, from creating the account to generating the cloned voice, took about two to four minutes.

The startup behind the website told Saeidi the technology can be used to narrate a book or give a voice to those without one.

To combat misuse, the company says users can upload audio clips to its website to see if they were generated by the company.

What's being done to protect Americans from voice cloning scams?

Scammers can pull your voice from videos posted to social media or your voicemail message, or they can call you and record you, so it's hard to fully protect yourself.

"Are regulators doing enough to protect us?" Saeidi asked Congresswoman Yvette D. Clarke.

"We're trying to keep pace with the evolution of technology and we aren't doing enough," Clarke said.

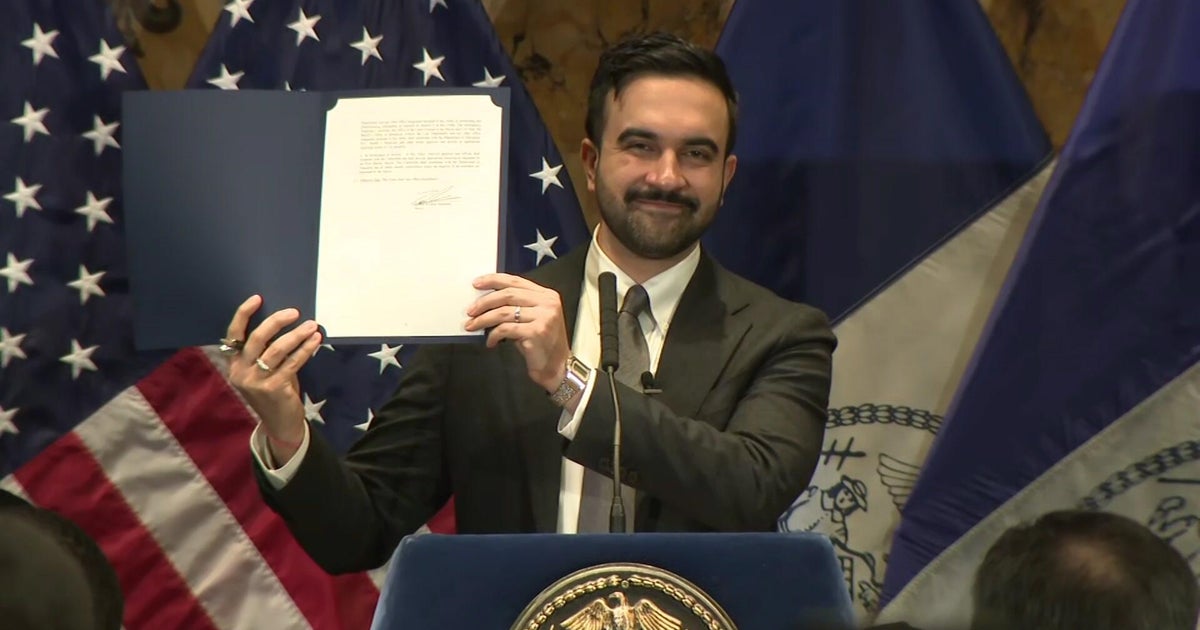

Clarke represents New York's Ninth District and is on the United States House of Representatives AI task force. She's introduced legislation that would require companies to digitally watermark AI-generated content. If the bill passes, consumers would be warned they're listening to a fake, and if they're harmed by the fake content, they could sue.

"It's gonna be very difficult for the average human being to discern what is real from what has been AI-generated ... Time is not on our side," Clarke said.

The startup Saeidi used to generate synthetic audio said they "very much support the introduction of regulation which will help establish the origins of digital content, including watermarking." They say you should be able to identify where synthetic audio is coming from, and they're already working to create that technology. They believe they will be setting the industry standard for how synthetic audio is identified.

As regulators try to catch up to scammers, Queens District Attorney Melinda Katz says it's up to you to defend yourself.

"If you can simulate someone's voice, there is no saying how far that can go," Katz said.

Law enforcement says you should create a secret code word and share it with family members in person. In an emergency, the code word will help you verify who's on the other line.

Officials also say, if anyone asks you for money or sensitive information, pause.

"Honor your senses, trust your gut, trust your judgment and doublecheck," Katz said.

Attorney nearly fooled by AI scam: "I knew it was my son"

Gary Schildhorn, an attorney experienced in fraud, nearly fell victim to a voice cloning scam himself.

"To me, it's nonsensical ... We allow these tools to be out there that can be used by scammers without risk of harm," he said.

Schildhorn says he jumped into action mode to bail out his son, Brett, after an apparent crash.

"He said, 'Dad, I'm in trouble, I got in an accident. I think I broke my nose. I hit a pregnant woman. They arrested me, I'm in jail. You need to help me.' I said, 'Brett, of course I'll help you,'" he said.

"Did you think it was your son?" Saeidi asked.

"I knew it was my son. There was no question in my mind," Schildhorn said.

Within two minutes, Schildhorn believed he had spoken with three different people -- his son, a defense attorney and a court clerk. As he drove to the bank to withdraw $9,000 in cash, he updated his son's wife. Then Brett called him on FaceTime.

"He said, 'Dad, my nose is fine. I'm fine. You're being scammed,'" Schildhorn said.

Because he hadn't lost money, he had no legal recourse.

Schildhorn contacted law enforcement, who were aware of the so-called family emergency scam, but he says they told him the criminals were untraceable because the scam relies on burner phones and cryptocurrency.

Despite his wife's concerns, Schildhorn went public, eventually testifying before a Senate committee on AI and fraud.

"In this case, there is no remedy ... and I hope that this committee can do something about that," he said.