Faceoff against Facebook: Stopping the flow of misinformation

When David Pogue interviewed Facebook founder Mark Zuckerberg in 2005, the company was just getting off the ground. "We've gone from having around 150,000 people in the fall to right around three million now," Zuckerberg said. "People use the site so much that it's creating a marketplace for advertising."

It was still called TheFacebook.com. It was still limited to college students, and it was still a little bit casual. Catching one of Facebook's employees crashing on a couch, Zuckerberg said, "Dude, what's up? Dude, you're on TV."

How did that Facebook become the object of criticism from Sen. Ed Markey (D-Mass.), who, at a Senate subcommittee hearing last Thursday, said, "Facebook is just like Big Tobacco, pushing a product that they know is harmful"?

Or the subject of stories like this Sept. 14 Wall Street Journal article: "Facebook knows Instagram is toxic for teen girls, company documents show"; or a report that stated Facebook's algorithms push divisive content because it drives engagement?

Even President Biden got involved: "Anyone listening to it is getting hurt by it," he said. "It's killing people!"

He was referring to one of Facebook's most burning problems: misinformation, like posts saying that the COVID vaccine causes miscarriages, or that the FDA is tracking unvaccinated people, or that the vaccine is the "Mark of the Beast."

None of that is true. But people really are dying from misinformation.

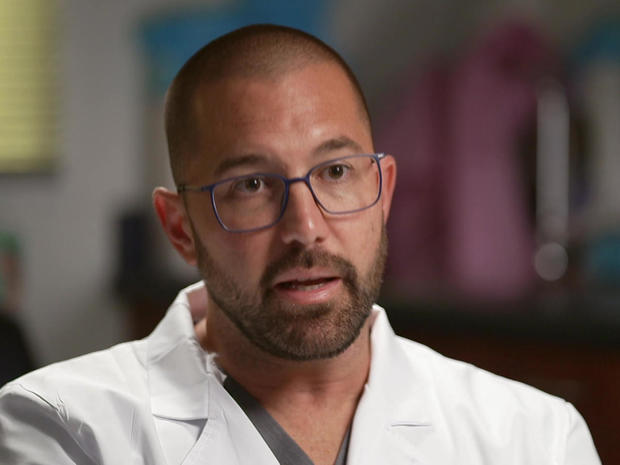

Pogue asked Adriano Goffi, a medical director of the Altus Health System near Houston, "How often do you see somebody die of COVID?"

"Almost every shift," he replied.

His emergency rooms have been overrun with desperately sick, unvaccinated COVID patients. He showed Pogue a massive binder representing patients just from August, most of whom refused the vaccine because they'd read bad information on social media.

Goffi said, "About 80 percent would come from Facebook; 'I read on Facebook it's poison, it's got tracking devices in me, it makes cows sterile.' I've heard all kinds of things."

Pogue asked, "When you encounter somebody like that, where are you on the scale of, 'This person's an idiot,' or, 'I feel so sorry for this person that they're this brainwashed'?"

"I feel really bad for individuals, because if that is your source, right, it's hard for them to separate reality and what is being fed to them," he replied.

"Do you have any impression of how reading this misinformation online affects the mindset of a patient?"

"It's very powerful that you can see it and feel it at individuals when they come in and they get their swab and they're sick, and some people, some patients even decline treatment. It's so powerful in them that they almost even deny COVID exists in some people, because of what they've read."

Now, you might be wondering: If bad information is so harmful, why does Facebook allow it? And the answer is, it's complicated.

"What they sell is user attention," said Laura Edelson, a misinformation researcher at New York University. "User attention gets sold in the form of advertising. So yes, there is a profit motive."

Edelson's studies have found that misinformation sells: "Misinformation in general gets more shares, comments, [and] likes than factual content. This effect is pretty large. My recent study found that it's a six-fold effect."

Pogue asked, "So, if something is bogus, I'm six times more likely to share it or like it than if it's true?"

"Maybe not you specifically, but in general it will get six times the engagement," Edelson said. "Facebook is a user engagement, a user interaction maximizing machine. That is what Facebook is built to do: Get users to interact with content as much as possible, as often as possible, for as long as possible."

As you might guess, Facebook is no fan of Edelson's research. So, this summer the company got tough – cutting off access to a group of researchers who were studying misinformation on the platform.

"In August of 2021, they shut down our accounts," Edelson said.

"So, you're not on Facebook anymore?"

"No, I'm not. I have no place to post my dog pictures!"

Now, all social-media companies have a COVID misinformation problem. But Facebook is nearly a trillion-dollar company, with 2.9 billion users a month. Its sheer size makes it special.

"Facebook is the most powerful media apparatus in the world," said New York Times tech columnist Kevin Roose, who has written extensively about misinformation on Facebook.

Pogue asked, "Why don't they just say, 'Oh, sorry, we'll have our amazing artificial intelligence just wipe misinformation off the platform'?"

"Well, it's not as easy as it sounds," Roose replied. "For one, a lot of people disagree about what misinformation is. They have had to walk a very fine line between removing genuinely harmful content from the site, while also, you know, not engaging in what they would consider censorship."

Facebook declined a request from "Sunday Morning" for an interview. But it heartily rejects the notion that it's killing people, or that it's doing nothing to fight COVID misinformation.

The company points out that it has: deleted over 20 million false posts; shut down the accounts of 3,000 repeat offenders; put warning labels on 190 million questionable posts; and promoted factual vaccine information, by building, among other things, a Vaccine Finder feature to help people get their shots.

In a statement, Facebook added, "We're encouraged to see that for people in the U.S. on Facebook, vaccine hesitancy has declined by about 50 percent, and vaccine acceptance is high. But our work is far from finished…"

Finally, as CEO Mark Zuckerberg told CBS News' Gayle King a few weeks ago, maybe the problem isn't Facebook … maybe it's America.

"If this were primarily a question about social media," he said, "I think you'd see that being the effect in all these countries where people use it. But I think that there's something unique in our ecosystem here."

All right. So, Facebook says it's doing everything in its power to fight misinformation.

But researchers, journalists and Congress don't believe it. They want Facebook to share its data on how many people are seeing the false posts.

Roose said, "And so, they were basically saying, 'You just have to trust us on that, because we're not going to show you the data.' And there are teams inside Facebook that are working very hard to prevent the spread of misinformation. I think the challenge is that a lot of that data and a lot of that work stays inside the company."

Pogue asked Edelson, "So, let me play Facebook advocate. If I were Facebook, I might say, 'Wait, wait, wait: We're supposed to reveal all our internal data? You don't ask that of Coca-Cola or Starbucks. Why does that belong to the public?'"

"Well, we do ask it of organizations like banks," she replied. "Banks are incredibly powerful institutions that are a vital part of modern society, but they also have the power to be incredibly harmful, and that's why banks are regulated. And I think we need to move toward something like that for social media companies."

"That's a radical change."

"It would be a radical change," said Edelson, "but I just don't see how the status quo can go on. It is not hyperbolic to say that misinformation on Facebook kills people."

Pogue asked Roose, "I'm left wondering where the truth lies. Is it corporate bungling, or is there an evil streak to it all?"

"I think there are some people who think, you know, even inside the company, who think that this thing has gotten sort of out of control," Roose replied. "It's sort of their version of a Frankenstein story where they built this platform that billions of people use, and it's just simply gotten a little out of control."

So, how will all this end?

Both parties in Congress seem intent on regulating Facebook, and the company says that it will make more of its data public. For Facebook, it all means more conflict and compromise.

But then, Mark Zuckerberg has been fighting battles over his baby since 2005. Back then, Pogue asked, "Have the lawsuits and the squabbles, has it, has it been a shock to you?"

"Uhm, it's not really shocking, but it is a little, like, upsetting, I guess," Zuckerberg replied. "But I guess if you're making something cool, it's something you have to deal with. For as long as you can, like, maintain that attitude and realize that, like, 'We're doing something that's positive and that's the only reason why anyone cares at all,' then, you know, I mean, we'll get through."

FROM 2005: Four young internet entrepreneurs ("Sunday Morning")

For more info:

- Dr. Adriano Goffi, medical director, Altus Emergency Center, Lumberton, Texas

- Laura Edelson, Tandon School of Engineering, New York University

- Kevin Roose, The New York Times

- facebook.com

Story produced by Mark Hudspeth. Editor: Remington Korper.
