Facebook users will soon be able to see if they interacted with Russian trolls

Facebook plans to introduce a new tool that will allow users to see if they were among the millions who interacted with certain pages created by a Russian troll farm associated with fake campaign ads, the company announced Wednesday.

"A few weeks ago, we shared our plans to increase the transparency of advertising on Facebook. This is part of our ongoing effort to protect our platforms and the people who use them from bad actors who try to undermine our democracy," the company said in a statement.

The company said in testimony to the Senate Judiciary Committee in October that over the course of three years, approximately 29 million people were "delivered" or "served" content from pages related to the Internet Research Agency, the Russian troll farm. The company believes as many as 126 million people could have seen that content.

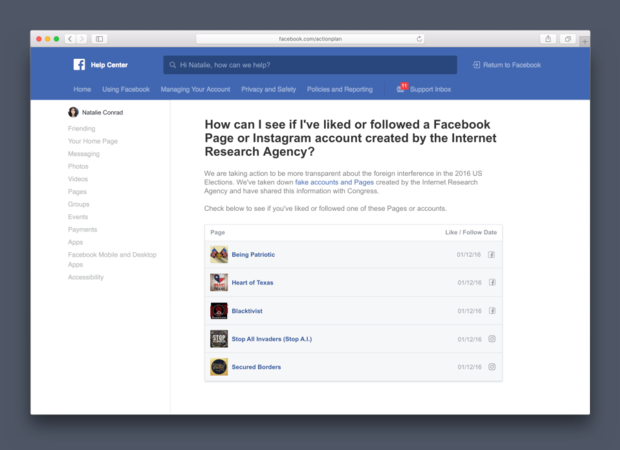

"We will soon be creating a portal to enable people on Facebook to learn which of the Internet Research Agency Facebook Pages or Instagram accounts they may have liked or followed between January 2015 and August 2017," Facebook said in its statement. "This tool will be available for use by the end of the year in the Facebook Help Center."

A Facebook spokesperson was not able to tell CBS News if the company has an exact release date planned for the portal. Facebook did not say if it plans to update the portal in the future if it discovers more Internet Research Agency accounts or similar schemes run by other organizations.

The company's statement included a screenshot of the tool, which highlights several Russian-linked accounts that appeared to pose as American users while posting divisive material during the 2016 presidential campaign.

"It is important that people understand how foreign actors tried to sow division and mistrust using Facebook before and after the 2016 US election," the company said in its statement. "That's why as we have discovered information, we have continually come forward to share it publicly and have provided it to congressional investigators. And it's also why we're building the tool we are announcing today."

In the weeks leading up to the 2016 U.S. election, Facebook shut down 5.8 million fake accounts in the United States. The accounts were removed in October 2016, but the company's automated tool did not yet reflect today's understanding that masses of fake accounts were being used to advance social and political causes. The company credits updates geared toward looking for political and social-focused accounts for the 30,000 accounts it disabled before this year's French election and tens of thousands of others removed before the German election.

Before the 2016 election in the U.S., the company's security team also told U.S. law enforcement officials it had seen indications that a group called APT28 had targeted employees of American political parties. U.S. law enforcement believes APT28 is connected with Russian intelligence operations.

Throughout 2017, Facebook has responded to a series of revelations related to fake users and flawed metrics on the social network.

In September, researchers exposed a security loophole that allowed at least a million Facebook accounts, both real and fake, to generate at least 100 million "likes" and comments as part of "a thriving ecosystem of large-scale reputation manipulation." CBS News tested out the network and confirmed its ability to quickly generate likes. Facebook posts that quickly receive a lot of likes are more likely to be placed higher in other people's feeds, meaning users buoyed by fake likes can ultimately generate significantly more real attention and influence.

The Wall Street Journal first reported details of the October 2016 account purge. In April of this year, Facebook conducted another purge, removing tens of thousands of fake accounts that had liked media pages as part of a wider strategy to appear real while spamming users, and it removed 30,000 accounts allegedly tied to Russian influence operations in the run-up to the French national election in May.

In 2016, the company announced that it had undercounted the traffic of some publishers and for more than a year had over-reported time spent on Facebook's Instant Articles platform. It acknowledged issues affecting a range of metrics -- including ad reach, streaming reactions, likes and shares -- and admitted that for two years it had reported to advertisers overestimated figures for the average time users spent watching videos on its platform.

The disclosures in 2016 led to a putative class action lawsuit, which was filed by a Facebook investor in January.

Got news tips about digital privacy, social media or online marketing? Email this reporter at KatesG@cbsnews.com, or for encrypted messaging, grahamkates@protonmail.com (PGP fingerprint: 4b97 34aa d2c0 a35d a498 3cea 6279 22f8 eee8 4e24).