Cleveland murder raises questions about violent videos on Facebook

Facebook is under fire over how it monitors and removes violent videos on the site. As authorities fanned out across five states Monday in a massive manhunt for a man who uploaded to Facebook a video in which he appeared to shoot and kill another man, the social media site is once again facing questions about how it polices violent content, having taken more than three hours to remove the offending video.

The video, posted Sunday, appears to show suspect Steve Stephens approaching the victim, 74-year-old Robert Godwin Sr., at random, before shooting and killing him. By the time the video was removed from the platform, word about the gruesome clip had spread across the nation.

Experts say the murder is just the latest in a series of incidents that call into question how the social media site monitors the growing flow of live and uploaded videos posted to the site. Facebook watchers expect to hear from founder Mark Zuckerberg on some of the platform’s more high-profile errors at its annual developers’ conference, F8, which kicks off this week.

Kathleen Stansberry, an assistant professor of public relations and social media at Cleveland State University, said Facebook’s users typically need to flag a video before the site’s employees screen it for content that violates the terms of service, which ban most violent imagery.

“They largely rely on users to police content, so people can report offensive material, and things that go against Facebook’s terms and conditions,” Stansberry said. “It’s sort of relying on general humanity to flag things.”

Karen North is a professor of social media at the Annenberg School at the University of Southern California.

“There is a big question of why didn’t anyone flag this and take it down for so long?” she asked CBS News.

That’s a question Facebook was not willing to answer today.

Facebook executive Desiree Motamedi -- who is in San Jose for F8 -- told CBS News’ John Blackstone she couldn’t comment on that.

“We want to show the new tech across Messenger, Oculus, virtual reality, augmented reality,” Motamedi said.

Like other sites that encourage users to upload videos and produce live streams, such as YouTube, Facebook says it employs thousands of people who examine content, but -- with the exception of certain widely viewed live videos -- it does not proactively monitor posts unless they’ve been flagged by a user.

In a statement released Monday afternoon, Facebook said it is taking another look at its system for flagging videos:

“As a result of this terrible series of events, we are reviewing our reporting flows to be sure people can report videos and other material that violates our standards as easily and quickly as possible. In this case, we did not receive a report about the first video, and we only received a report about the second video -- containing the shooting -- more than an hour and 45 minutes after it was posted. We received reports about the third video, containing the man’s live confession, only after it had ended.

“We disabled the suspect’s account within 23 minutes of receiving the first report about the murder video, and two hours after receiving a report of any kind. But we know we need to do better.

“In addition to improving our reporting flows, we are constantly exploring ways that new technologies can help us make sure Facebook is a safe environment. Artificial intelligence, for example, plays an important part in this work, helping us prevent the videos from being reshared in their entirety. (People are still able to share portions of the videos in order to condemn them or for public awareness, as many news outlets are doing in reporting the story online and on television). We are also working on improving our review processes. Currently, thousands of people around the world review the millions of items that are reported to us every week in more than 40 languages. We prioritize reports with serious safety implications for our community, and are working on making that review process go even faster.”

The site first began to tweak its video monitoring policies in July 2016, after a Minnesota man, Philando Castile, was filmed by his girlfriend as he died after being shot by a police officer. Since then, similarly gruesome videos have appeared on the platform in increasing numbers.

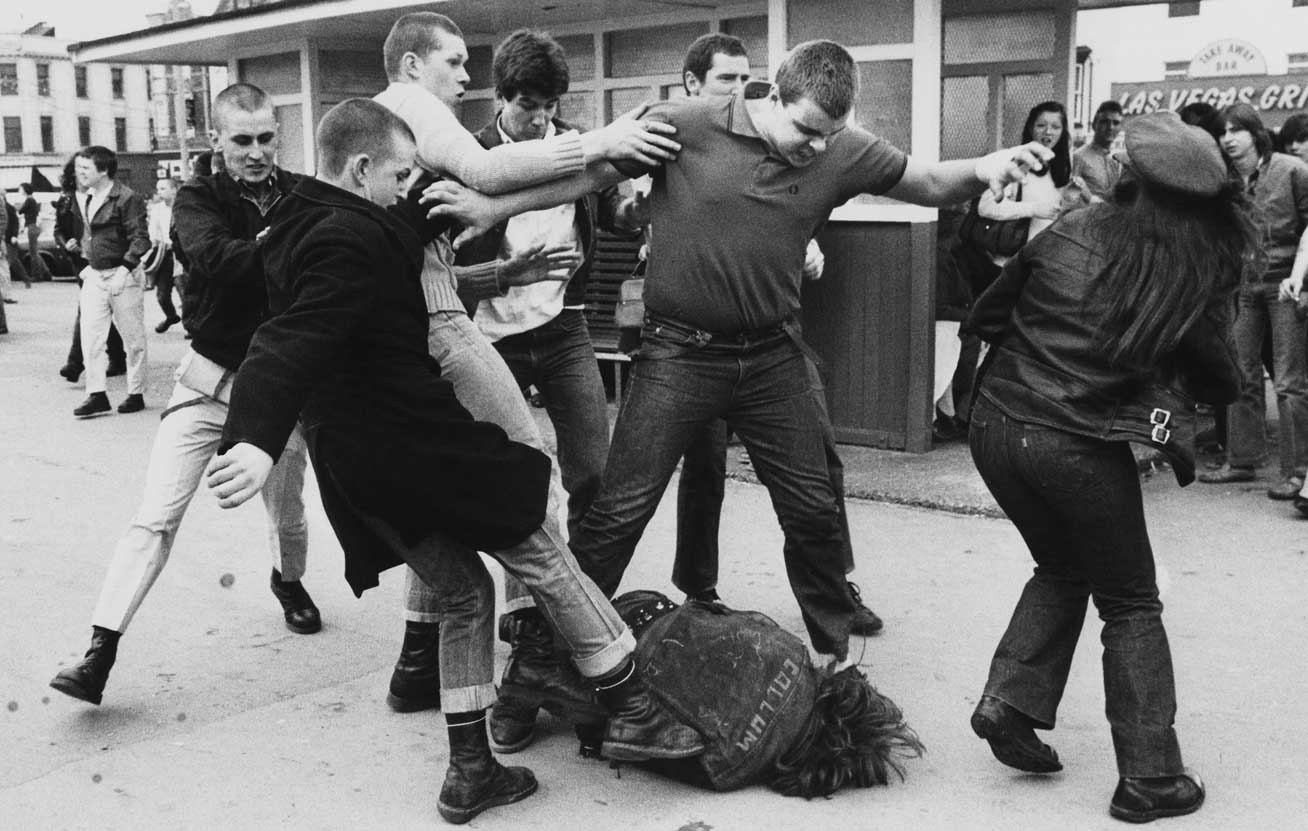

- In January, horrified viewers watched as four people in Chicago, who were later charged with hate crimes, appeared to beat and torture a mentally disabled man.

- In February, also in Chicago, a man and a toddler were shot and killed while in a car with the boy’s mom. That was also caught on Facebook Live.

- That same month, an alleged gang rape was live streamed in Sweden.

- In March, a mom tracked down the Chicago chief of police to tell him she witnessed her daughter’s sexual assault on Facebook Live. Dozens reportedly watched that video as it happened.

Jennifer Grygiel, an assistant professor of communications at Syracuse University’s S.I. Newhouse School of Public Communications, said the violent content raises serious questions about whether it’s appropriate for the site to wait until a user has flagged a video before intervening. Grygiel said the site needs to implement new age restrictions for Facebook Live videos and other policy changes.

“The content that’s uploaded to Facebook is monitored by end users, which includes children. And I’m saying, ‘No, Facebook, children cannot be your moderators,’” Grygiel said. “Facebook has more of a responsibility to the public, and that means hiring more humans to monitor this content.”

Grygiel noted that the site doesn’t release information about who viewed any of the violent scenes played out on Facebook Live or in uploaded videos. The company sees its future as tied to video content, something Facebook CEO Mark Zuckerberg has acknowledged frequently.

“People are creating and sharing more video, and we think it’s pretty clear that video is only going to become more important. So that’s why we’re prioritizing putting video first across our family of apps,” Zuckerberg said during the company’s third-quarter earnings call with investors in November.

As the company fulfills Zuckerberg’s promise to expand video, the problem of violent content could have an impact on the company’s bottom line, Grygiel said. She pointed to other recent scandals, including an investigation into Marines who shared naked photos of women without permission, some of whom were fellow Marines.

“It’s called corporate social responsibility -- it’s when public outcry raises the bar beyond current regulatory requirements,” Grygiel said. “We as a public need to essentially demand that they clean up their platform.”